regularization machine learning mastery

The regularization parameter in machine learning is denoted λ; it controls how strongly the penalty is applied. Regularization helps you avoid overfitting your model to the training data.

This technique prevents the model from overfitting by adding extra information to it.

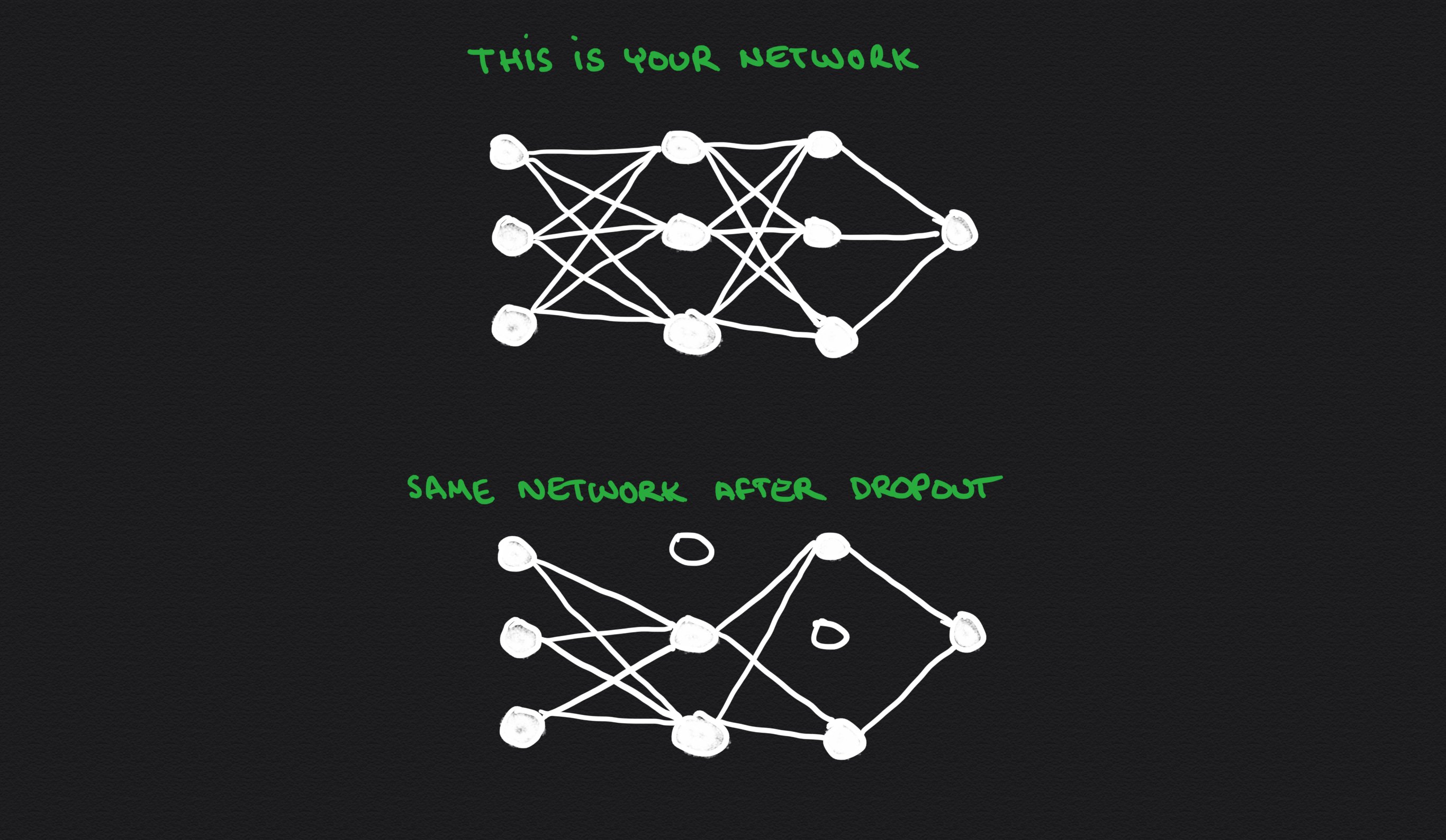

Regularization in general penalizes the coefficients that cause the model to overfit.

Regularization is one of the most basic and important concepts in machine learning, and it is one of the techniques used to overcome overfitting. I have covered the entire concept in two parts; Part 1 deals with the theory. There are three commonly used regularization techniques.

Penalty-based regularization imposes a higher penalty on variables that take larger values.

Dropout is a regularization technique for neural network models proposed by Srivastava et al. in their 2014 paper "Dropout: A Simple Way to Prevent Neural Networks from Overfitting".
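To make the dropout idea concrete, here is a minimal sketch of the common "inverted dropout" variant in plain Python. The function name `dropout` and its parameters are hypothetical, chosen for illustration; real frameworks provide their own dropout layers.

```python
import random


def dropout(activations, rate, training=True, rng=random):
    """Inverted dropout (illustrative sketch, not a library API).

    Each activation is zeroed with probability `rate`; the survivors are
    divided by (1 - rate) so the expected value of each unit is unchanged.
    At inference time (training=False) the input is returned as-is.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


# Example: drop roughly half of the units in a layer of ones.
layer_output = [1.0] * 10
dropped = dropout(layer_output, rate=0.5, rng=random.Random(0))
```

Because a different random subset of units is silenced on every training step, the network cannot rely on any single unit, which is what gives dropout its regularizing effect.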

Technically, regularization avoids overfitting by adding a penalty to the model's loss function. In regression, it shrinks the coefficient estimates. Two norms are commonly used for the penalty: L1 and L2.
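As a rough illustration of adding a penalty to the loss, the sketch below computes a mean squared error plus an L1 or L2 penalty scaled by λ. The helper names (`mse`, `l1_penalty`, `l2_penalty`, `regularized_loss`) and the choice of mean squared error as the base loss are assumptions made for this example.

```python
def mse(y_true, y_pred):
    """Mean squared error between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def l1_penalty(weights):
    """L1 norm: sum of absolute weight values."""
    return sum(abs(w) for w in weights)


def l2_penalty(weights):
    """Squared L2 norm: sum of squared weight values."""
    return sum(w * w for w in weights)


def regularized_loss(y_true, y_pred, weights, lam, norm="l2"):
    """Base loss plus lam times the chosen penalty (hypothetical helper)."""
    if norm == "l1":
        penalty = l1_penalty(weights)
    elif norm == "l2":
        penalty = l2_penalty(weights)
    else:
        raise ValueError("norm must be 'l1' or 'l2'")
    return mse(y_true, y_pred) + lam * penalty


# With a perfect fit, the loss consists of the penalty alone.
weights = [3.0, -4.0]
loss_l1 = regularized_loss([1.0, 2.0], [1.0, 2.0], weights, lam=0.1, norm="l1")
loss_l2 = regularized_loss([1.0, 2.0], [1.0, 2.0], weights, lam=0.1, norm="l2")
```

The L2 penalty grows quadratically with the weight values, so it punishes large weights much harder than the L1 penalty does.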

Controlling overfitting is exactly why we use regularization in applied machine learning. Based on the approach used to overcome overfitting, we can classify regularization techniques into three categories.

Let's consider the simple linear regression equation. Regularization works by adding a penalty, or complexity term, to the loss of a complex model.
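Written out, the idea might look like the following. The symbols $\beta_0$, $\beta_1$, $\varepsilon$ and $J$ are notation assumed here for illustration; only λ comes from the text above.

```latex
% Simple linear regression model
y = \beta_0 + \beta_1 x + \varepsilon

% Unregularized least-squares cost over n training points
J(\beta_0, \beta_1) = \sum_{i=1}^{n} \left( y_i - \beta_0 - \beta_1 x_i \right)^2

% Cost with an L2 penalty on the slope
J_{L2}(\beta_0, \beta_1) = \sum_{i=1}^{n} \left( y_i - \beta_0 - \beta_1 x_i \right)^2 + \lambda \beta_1^2

% Cost with an L1 penalty on the slope
J_{L1}(\beta_0, \beta_1) = \sum_{i=1}^{n} \left( y_i - \beta_0 - \beta_1 x_i \right)^2 + \lambda \left| \beta_1 \right|
```

Setting λ to zero recovers ordinary least squares, while larger values of λ push the slope toward zero. The intercept $\beta_0$ is conventionally left unpenalized.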

This is an important theme in machine learning. In general, regularization means making things regular or acceptable.

Regularization is one of the techniques used to control overfitting in highly flexible models. Overfitting happens when your model captures the noise in the training data rather than the underlying pattern.

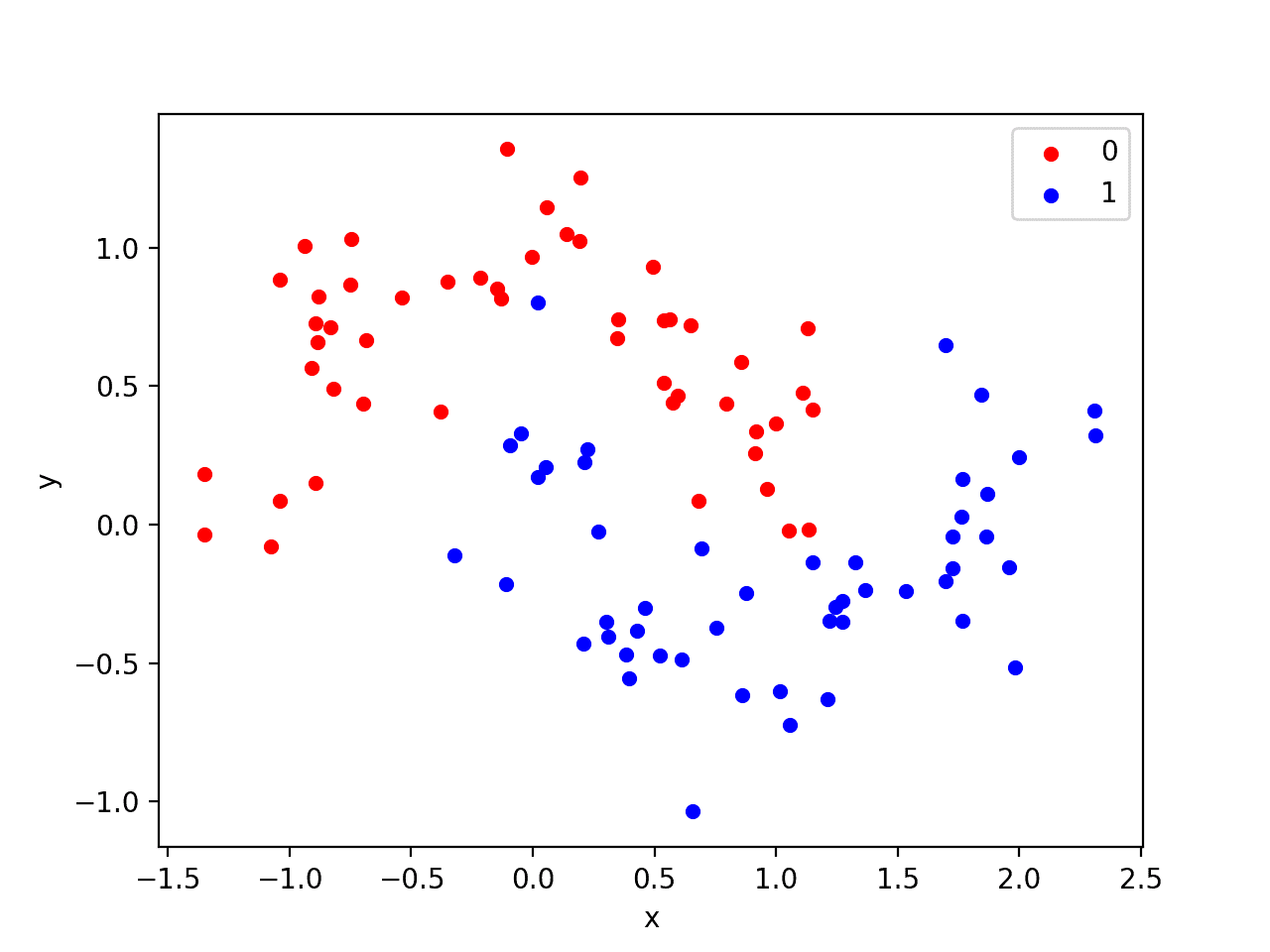

Regularization dodges overfitting. In Figure 4, the black line represents a model without Ridge regression applied, and the red line represents a model with Ridge regression applied. Note how much smoother the red line is.
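The smoothing effect of Ridge regression comes from shrinking the coefficients. The sketch below shows this for the simplest possible case, a single feature with no intercept, where the ridge solution has a closed form. The function `fit_ridge_1d` and the toy data are assumptions made for illustration and are not the model behind Figure 4.

```python
def fit_ridge_1d(xs, ys, lam):
    """Closed-form ridge fit for one weight w and no intercept.

    Minimizes sum((y - w * x) ** 2) + lam * w ** 2, which gives
    w = sum(x * y) / (sum(x * x) + lam). With lam = 0 this is
    ordinary least squares.
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


# Toy data that roughly follows y = 2x (made up for this example).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

# Fit with increasing penalty strength.
weights = {lam: fit_ridge_1d(xs, ys, lam) for lam in (0.0, 1.0, 10.0)}
for lam, w in weights.items():
    print(f"lambda={lam:>4}: w={w:.4f}")
```

As λ increases, the fitted weight moves toward zero. In a model with many coefficients, the same shrinkage is what flattens out the wiggles of an overfit curve.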

How To Use Weight Decay To Reduce Overfitting Of Neural Network In Keras

Machine Learning Prediction Models For In Hospital Mortality After Transcatheter Aortic Valve Replacement Sciencedirect

Use Weight Regularization To Reduce Overfitting Of Deep Learning Models

Machine Learning Ml Complete Guide Jc Chouinard

Santiago On Twitter Overfitting Sucks Here Are 7 Ways You Can Deal With Overfitting In Deep Learning Neural Networks Https T Co Hdafnkjfam Twitter

Regularization In Deep Learning

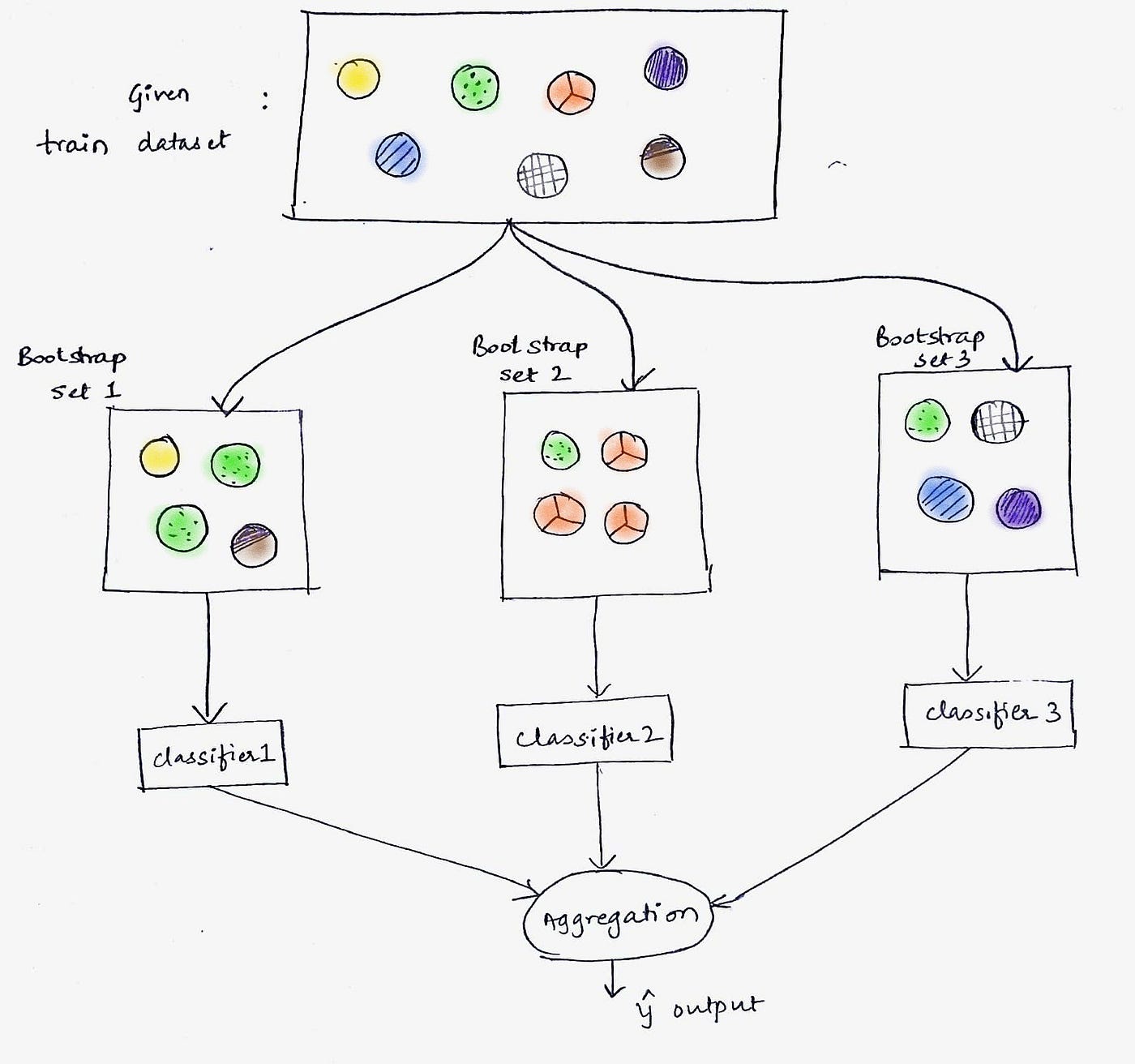

Random Forest Overview A Conceptual Overview Of The Random By Kurtis Pykes Towards Data Science

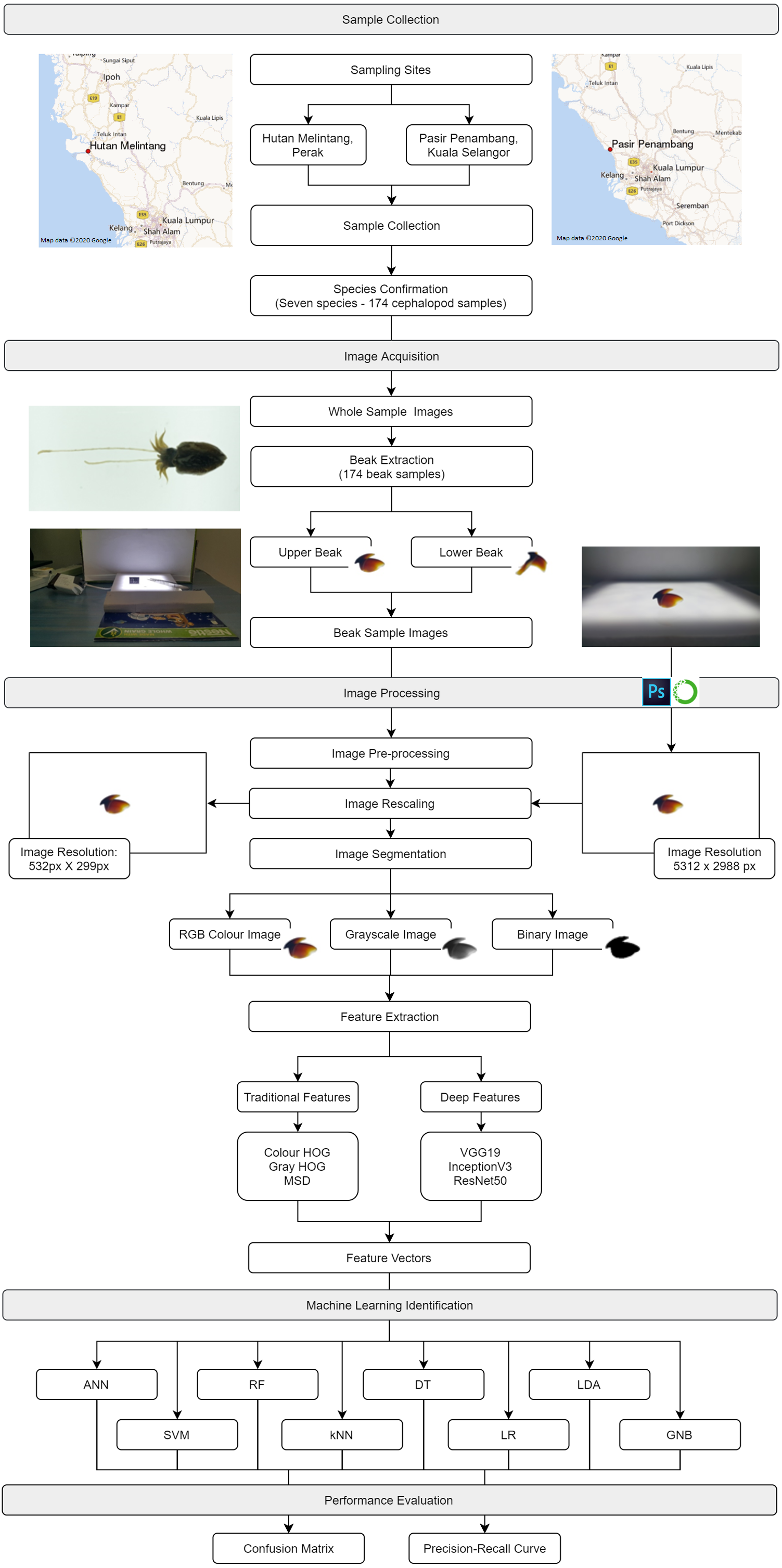

Cephalopod Species Identification Using Integrated Analysis Of Machine Learning And Deep Learning Approaches Peerj

Pdf Machine Learning Resource Guide Dadas Tching Academia Edu

Machine Learning Algorithm Ai Ml Analytics

Types Of Regularization In Machine Learning By Aqeel Anwar Towards Data Science

Addressing Diverse Petroleum Industry Problems Using Machine Learning Techniques Literary Methodology Spotlight On Predicting Well Integrity Failures Acs Omega

Fighting Overfitting With L1 Or L2 Regularization Which One Is Better Neptune Ai

What Is Activation In Convolutional Neural Networks Quora

Regularization Four Techniques Problem Solving By Mia Morton Medium

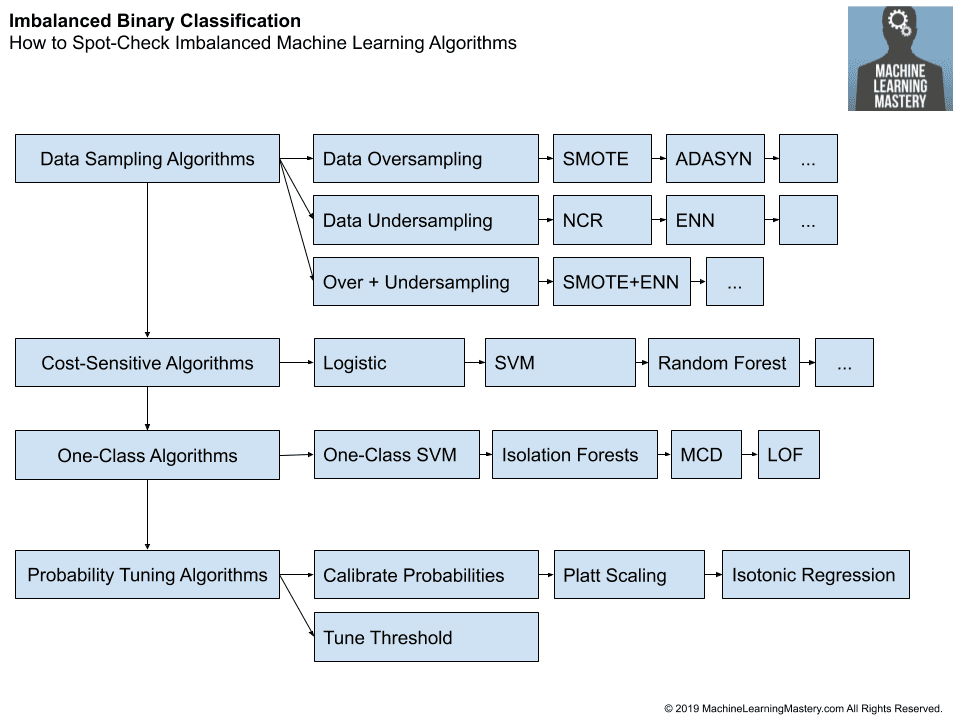

Step By Step Framework For Imbalanced Classification Projects